Scenario: You slaved over a training presentation for the team. You brought your best PowerPoint animations and p-values, and you stressed how important this data was to the organization. Everyone rated the webinar as the ‘best ever’ [it was an option on the smile sheet you created]! It was obviously a winner of a training event. A week later, the team is not doing what you told them to do in the training. Why? Were they multitasking on the call? Are they just too busy? There are many possible reasons, but without knowing whether the audience walked away from the event with the information you hoped to instill, you are dead in the water. What about a post-event quiz?

Quizzing learners during or at the end of a training event (live, virtual, or on-demand) is a great way to assess knowledge transfer. For a variety of reasons, quizzes are often composed of multiple-choice questions (MCQs), and it’s probably no surprise that multiple-choice tests (MCTs) are the most widely used format for measuring knowledge, comprehension, and application outcomes.

You’ve likely taken hundreds, if not thousands, of multiple-choice tests in your life. Anyone remember their SAT score? Standardized tests are created to be psychometrically strong. Psycho – what? Psychometrics is the discipline concerned with constructing assessment tools, measurement instruments, and formalized testing models. Many tests are composed solely of MCQs. MCTs allow efficient coverage of a great deal of information, and the tests can be written to assess various learning outcomes – from basic recall to data evaluation. However, there are limits to the types of knowledge that can be tested with MCTs. For example, MCTs are not a strong choice for evaluating someone’s ability to organize thoughts or articulate ideas, both of which are key in Medical Affairs.

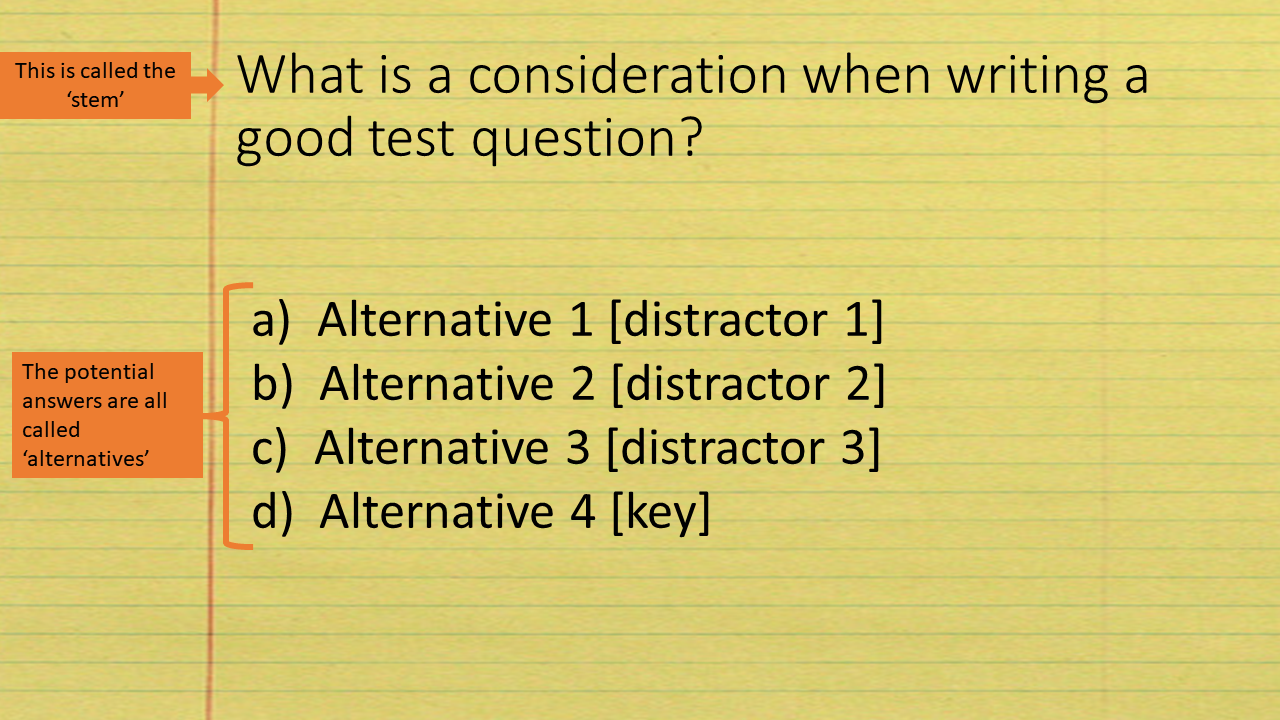

Each MCQ has a stem (the question) and a set of alternatives (the answer options). The stem is the clearly stated problem that you are testing. The correct or ‘best’ answer is referred to as the ‘key’, and all other alternatives are called ‘distractors.’

A good stem is written so that the test taker can answer the question without having read the potential answers. Without even having read the potential answers, you say?! Below are 6 tips for writing better stems for your savvy Medical Affairs team.

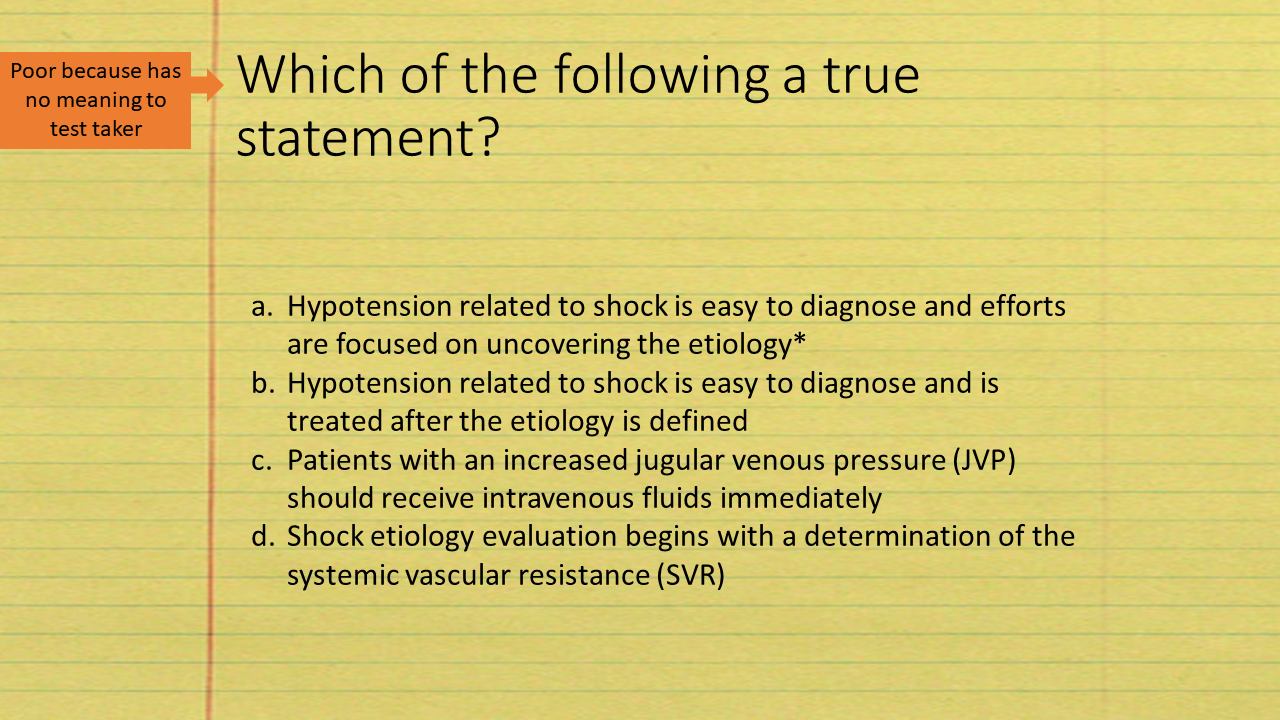

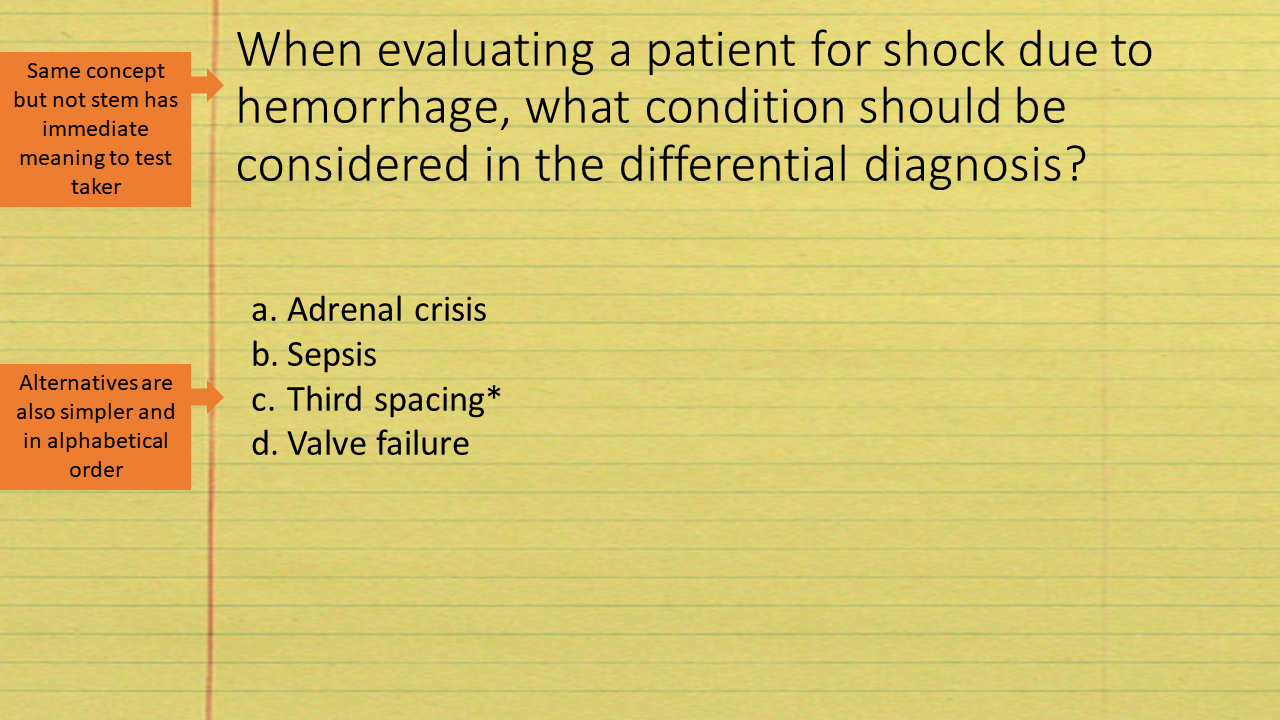

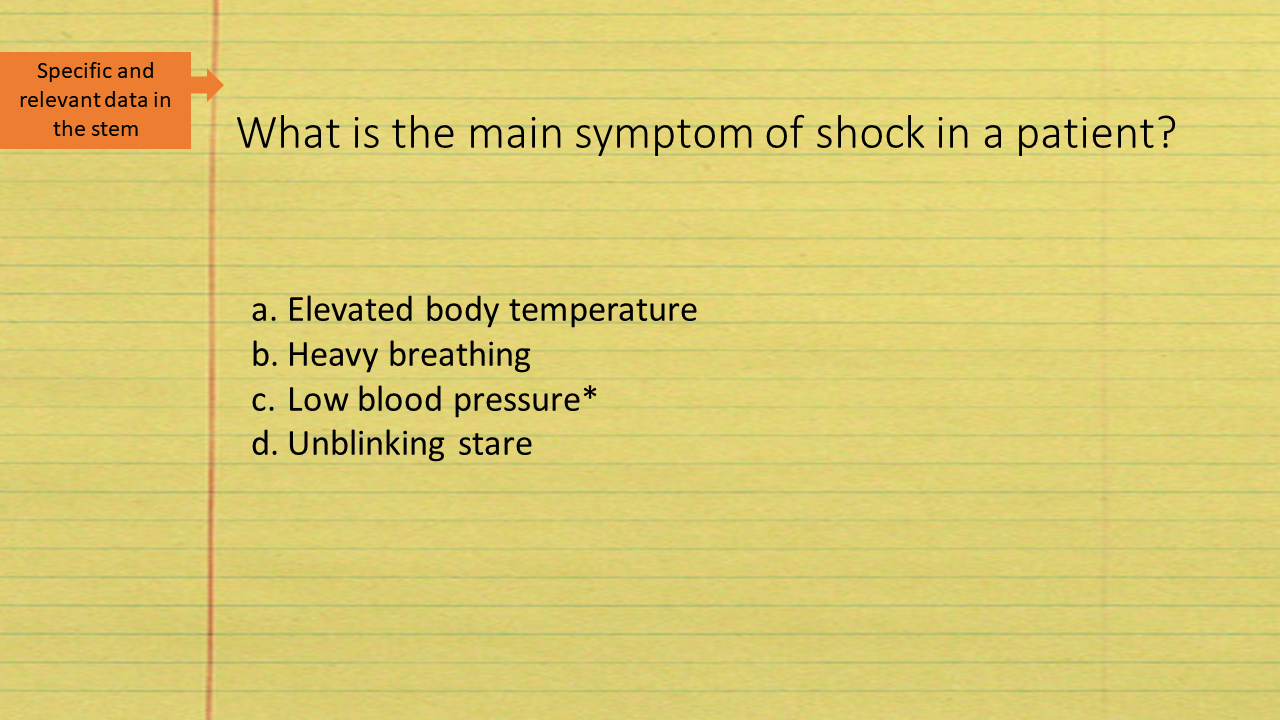

- Meaningful. The stem should be meaningful when the test taker reads it. The question must represent a topic or concept that was presented during the training; a test cannot evaluate what examinees learned if it asks about information they were never expected to learn. Ideally, the stem communicates a clear purpose and intent.

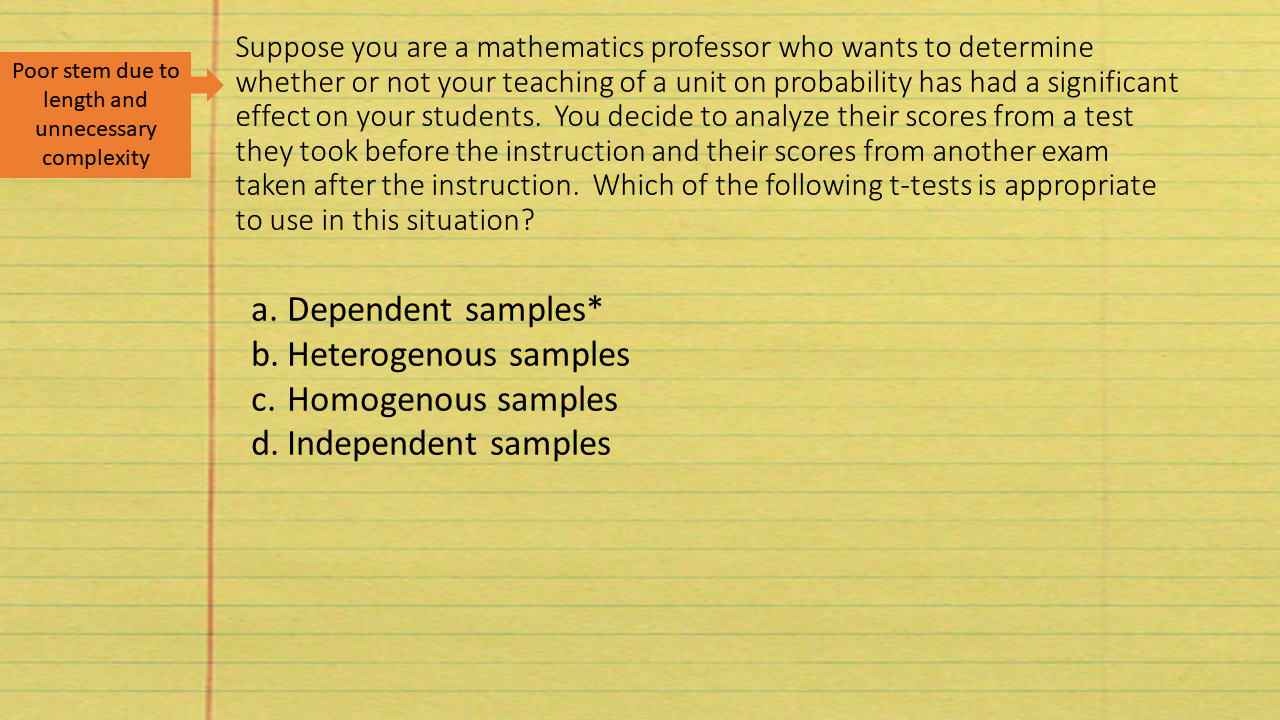

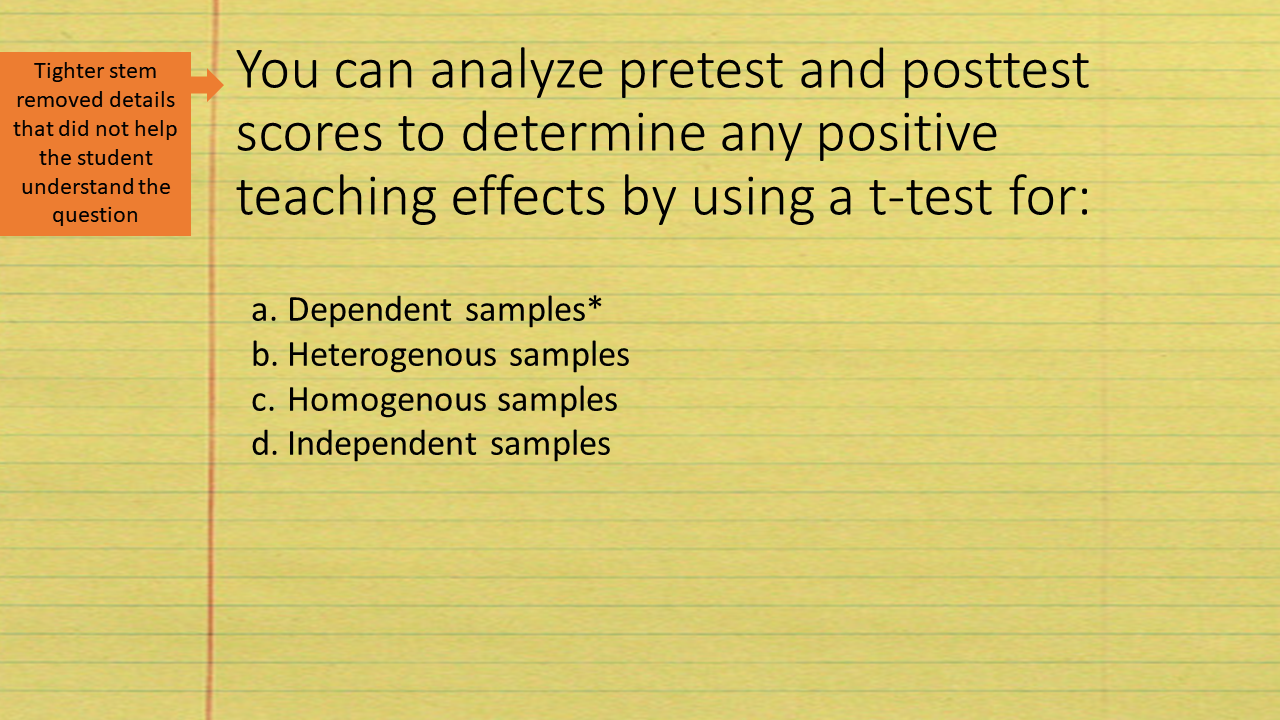

- Concise. Directions included in the stem should be clear and free of excess words. Edit test questions for brevity. Choose your words carefully and get to the point in a way the audience can easily comprehend. If a scenario is part of the question, the test taker should need it to answer the question. Adding extra information to ‘teach’ is not psychometrically valid. Write in simple terms. For example, don’t use ‘commence’ when ‘begin’ is what you mean. Keep the thesaurus ready for that novel you keep meaning to write!

- Relevant. The stem should not contain irrelevant information that would decrease the test taker’s ability to understand what is being asked.

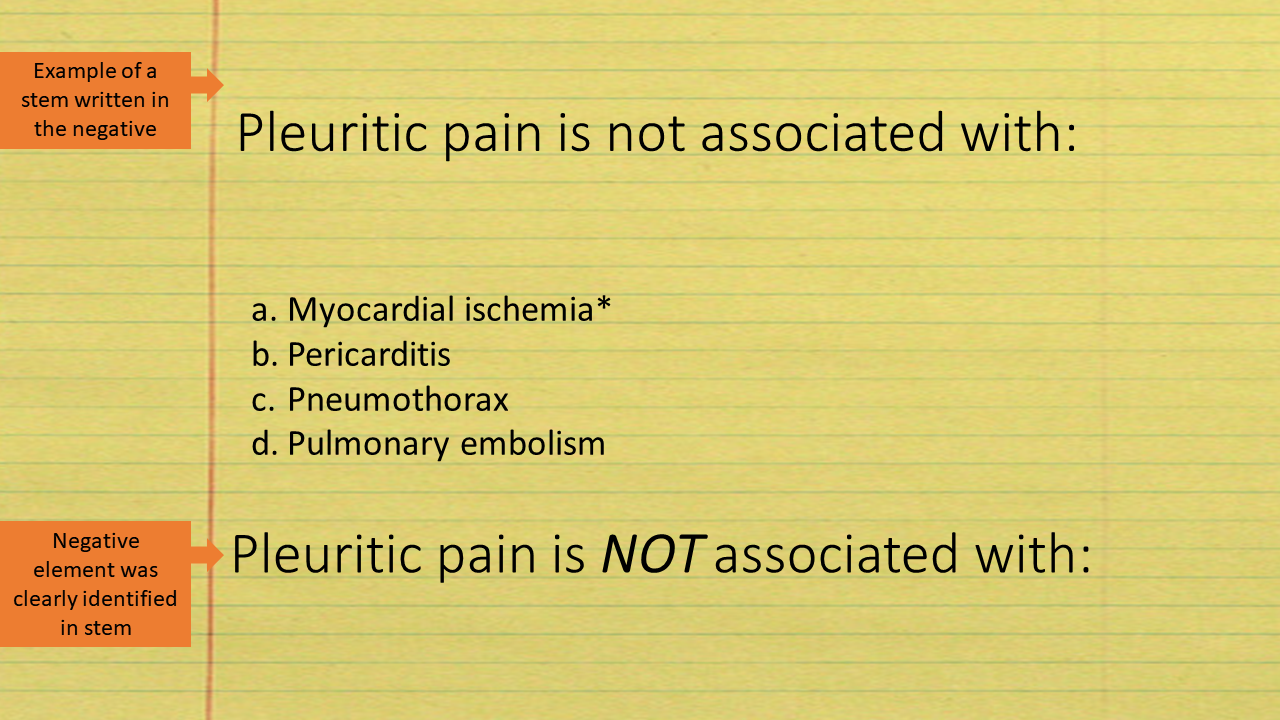

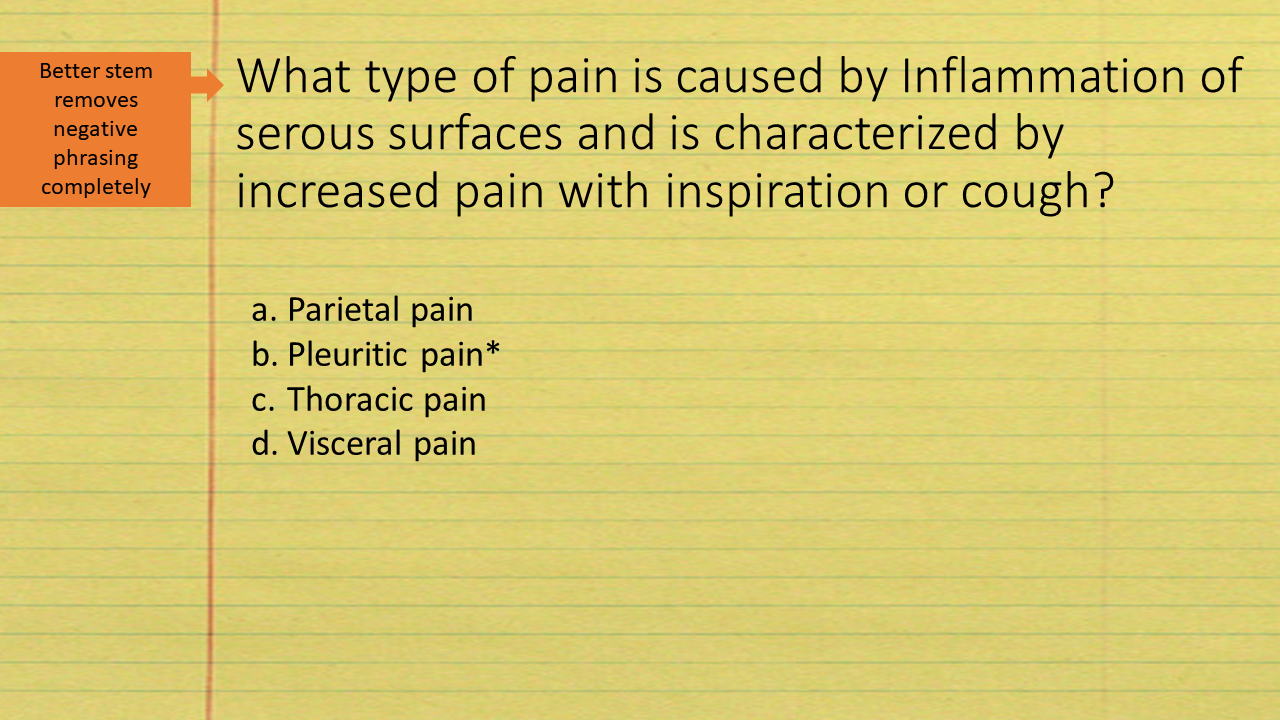

- Positive. Negative phrasing is often difficult to understand, and 31 of 35 testing experts recommend avoiding negatively worded questions. When the stem is written in the negative, it takes additional time to read and understand the question. Unfortunately, negatively worded questions are usually quicker and easier to construct, so they continue to be used. If the content genuinely requires a negatively worded question, make the negative element very clear with bold, italics, and CAPITALIZATION.

The content of this example question does not require a negatively worded stem, so it should be rewritten as:

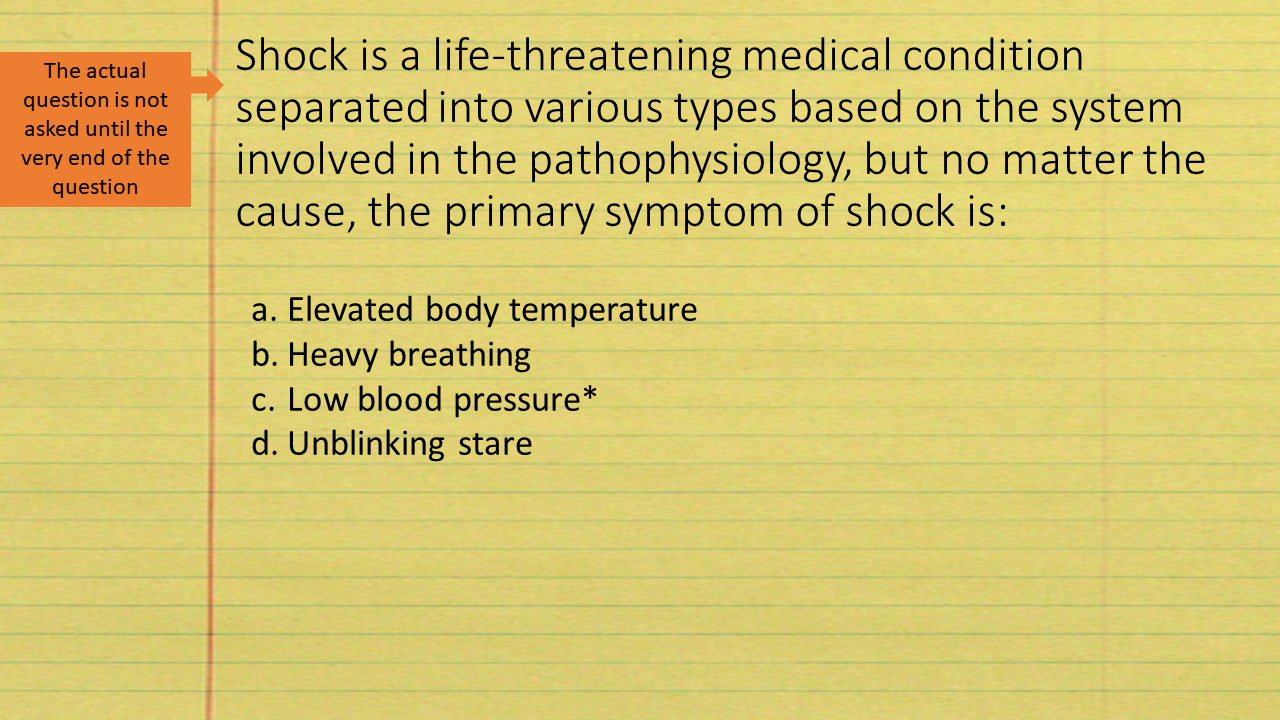

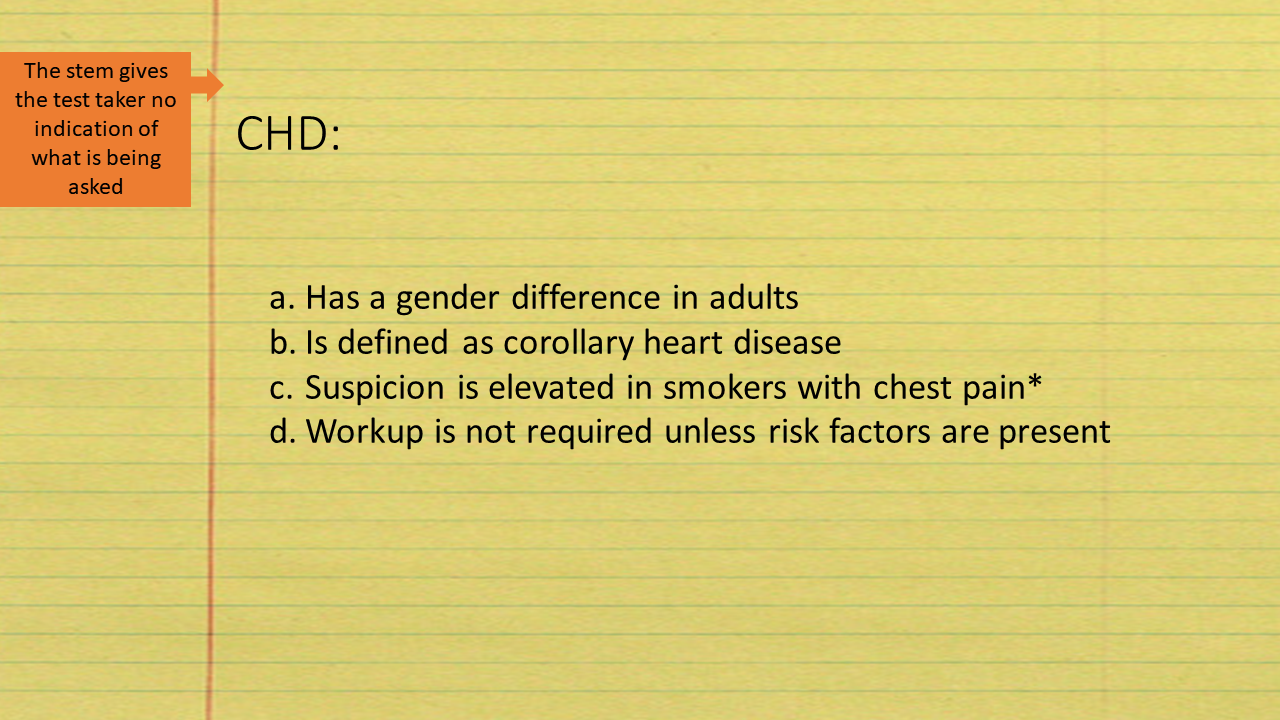

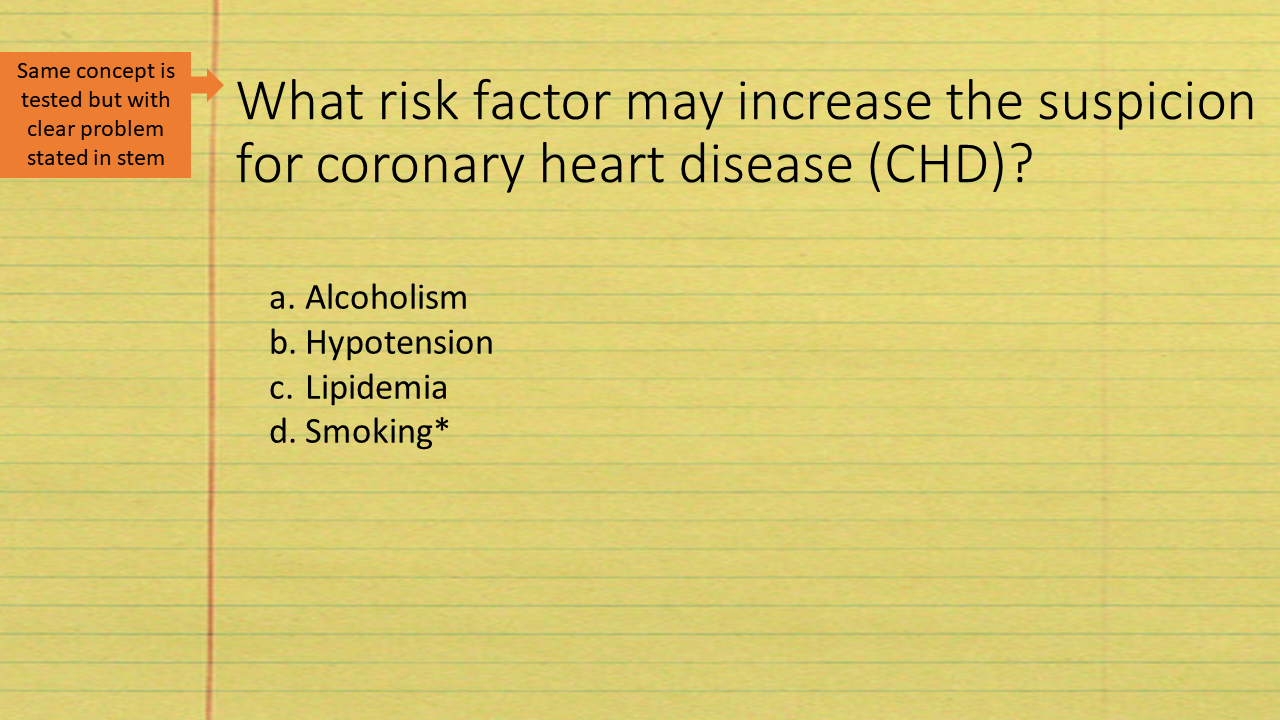

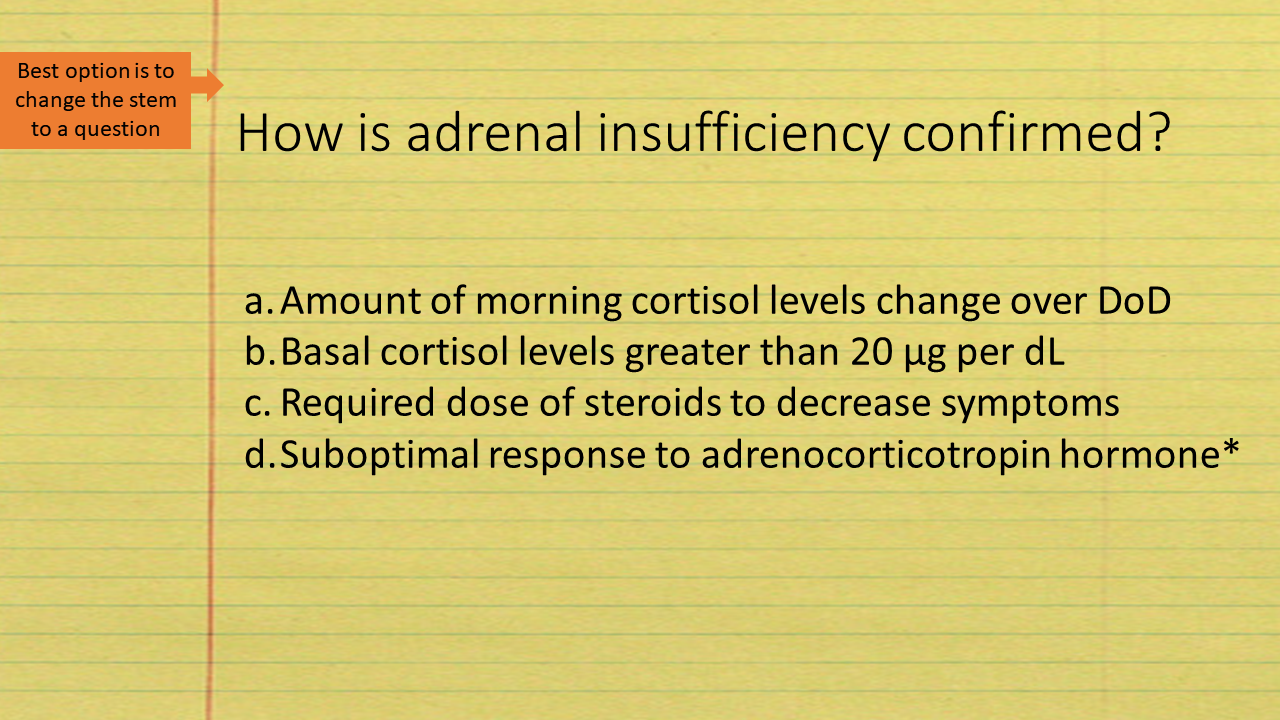

- Clear. Along with writing a concise stem, the test maker should make each question as clear as possible. An unfocused stem does not make it clear to the test taker what is being asked and requires that each answer option be carefully reviewed before a selection can be made. Alternatives should not be a series of independent true or false statements.

In this example, the stem does not make it clear to the test taker what concept is being tested until all four of the alternatives are read and evaluated. This eats up time during the test, even if the question is not meant to be difficult.

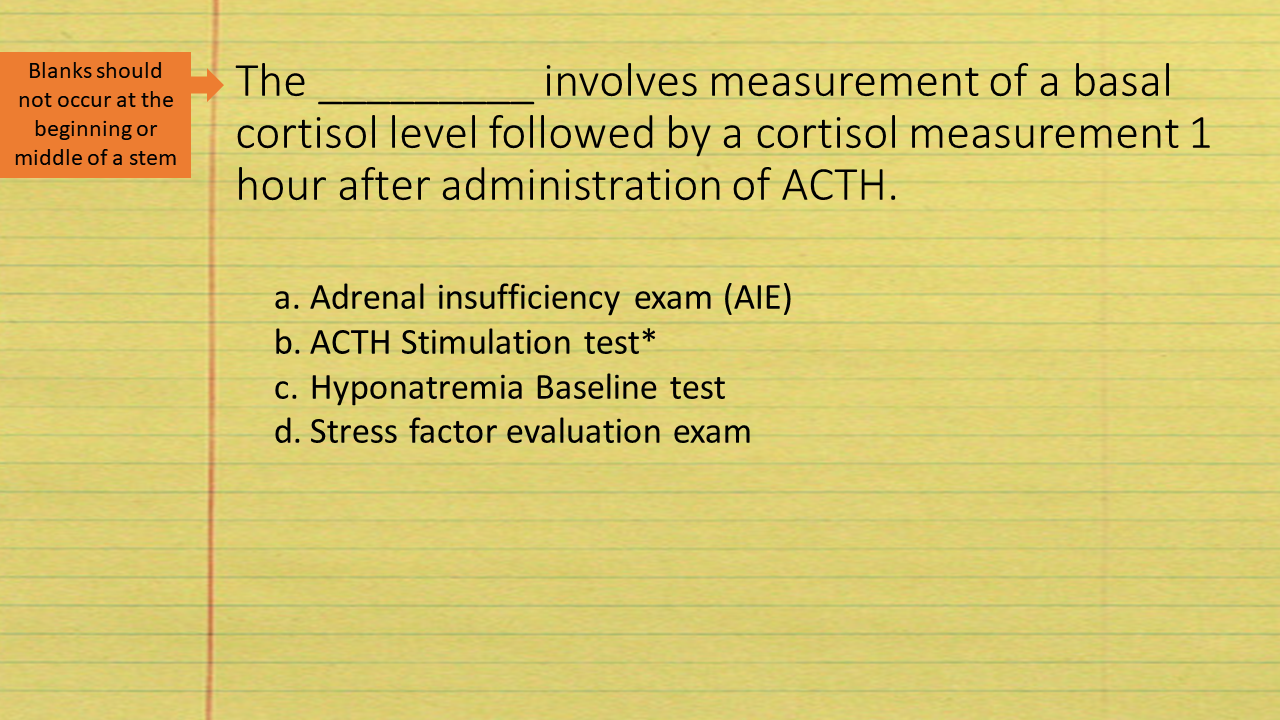

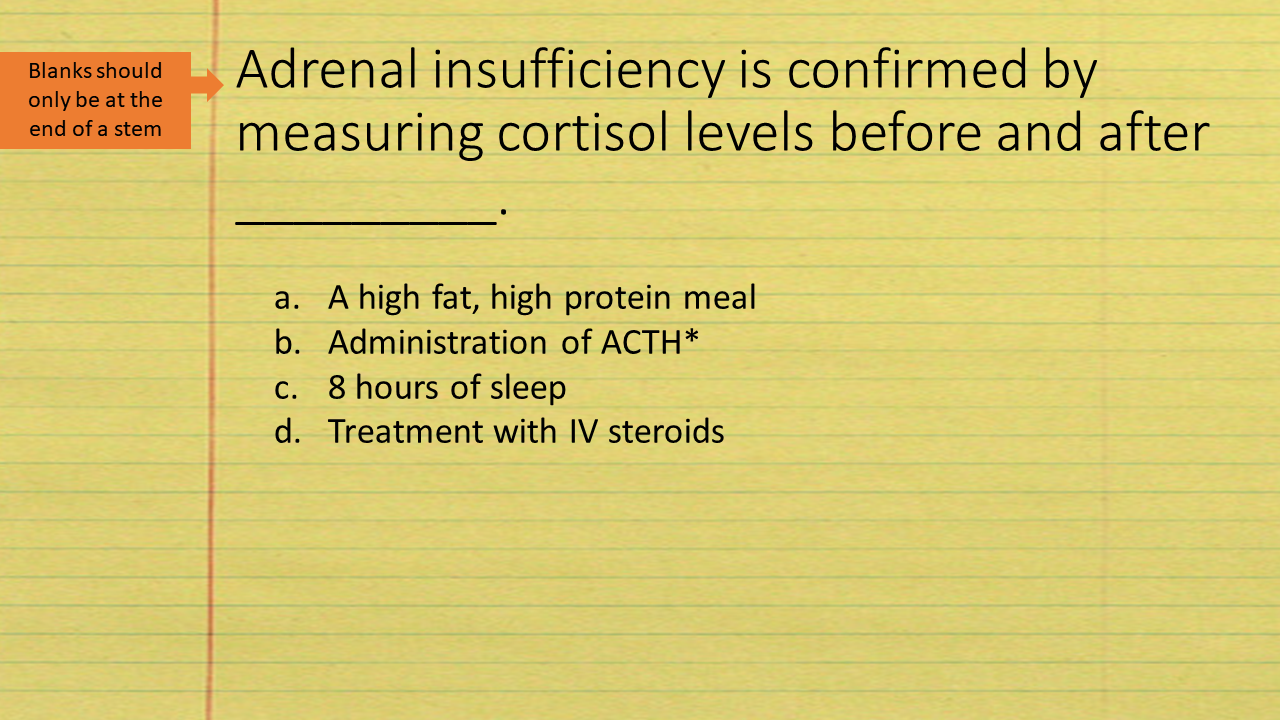

- Fill in the blank. Psychometricians (those crazy folks who build a life around psychometrics) generally frown upon fill-in-the-blank questions. If you are determined to use one, place the blank at the end of the stem rather than at the beginning or in the middle. An initial or interior blank unnecessarily increases cognitive load.

Ideally, the stem should be written in either question or completion format for best conformance to standards.

Examinees and examiners frequently perceive MCQ-based testing as ‘unfair’, for several reasons. Critics of MCQs argue they only test regurgitation of factual material. However, MCQs can be written to assess higher-order domains by asking learners to interpret facts, evaluate situations, explain cause and effect, make inferences, and predict results. It’s just harder to write those types of questions, so they are rare.

Real-world problem solving differs from selecting a solution from a set of alternatives, but efforts should still be made to assess levels 5-6 of Bloom’s Taxonomy with MCQs. Medical Affairs employees use factual knowledge from various sources to identify the best way forward in a situation, and MCTs do not easily test that ability. MCTs are not the best option for assessing critical analysis or the ability to communicate knowledge; alternative testing methods are better suited to those qualities. Interestingly, test takers have fewer issues with the lack of real-world applicability and usually consider MCTs unfair because of internal bias and item-writing failures.

You may have experience with test questions that are intended to ‘trick’ the test taker. Let’s face it – we’ve all been exposed to poorly written tests over our test-taking lifespan. Test writing should not be about tricks. It is true that writing a solid set of MCQs is time-consuming and resource-intensive. Some estimates suggest that it takes 1 hour to write a strong multiple-choice question! When creating a quiz is just one task of many on your plate, that’s an impossible expectation! So, when time is tight and the quiz is needed immediately, use the tips above to write clear, strong question stems. With a little time and effort, most test questions can be rewritten to conform to these 6 psychometric best practices.

There are guides and workshops focused on writing good test questions, and some universities now send new faculty to multi-day workshops to hone their test-writing skills. In general, those of us training Medical Affairs colleagues are on our own, so contribute to the discussion by adding your own best practices and barriers in the comments section below.

References:

Zimmaro, D.M., Writing Good Multiple-Choice Exams. 2016, University of Texas at Austin. p. 1-42.

Lai, H., et al., Using Automatic Item Generation to Improve the Quality of MCQ Distractors. Teach Learn Med, 2016. 28(2): p. 166-173.

Tarrant, M., et al., The frequency of item writing flaws in multiple-choice questions used in high stakes nursing assessments. Nurse Educ Pract, 2006. 6(6): p. 354-363.

Rodriguez-Diez, M.C., et al., Technical flaws in multiple-choice questions in the access exam to medical specialties (“examen MIR”) in Spain (2009-2013). BMC Med Educ, 2016. 16.

McCoubrie, P., Improving the fairness of multiple-choice questions: a literature review. Med Teach, 2004. 26(8): p. 709-712.

Brame, C.J., Writing Good Multiple Choice Test Questions. [Blog] 2013 [cited 2018 30 May]; Available from: https://cft.vanderbilt.edu/guides-sub-pages/writing-good-multiple-choice-test-questions/.

Center for Teaching and Learning. 14 Rules for Writing Multiple Choice Questions. [Document] [cited 2018 June 1]; Available from: https://valenciacollege.edu/faculty/development/coursesResources/.